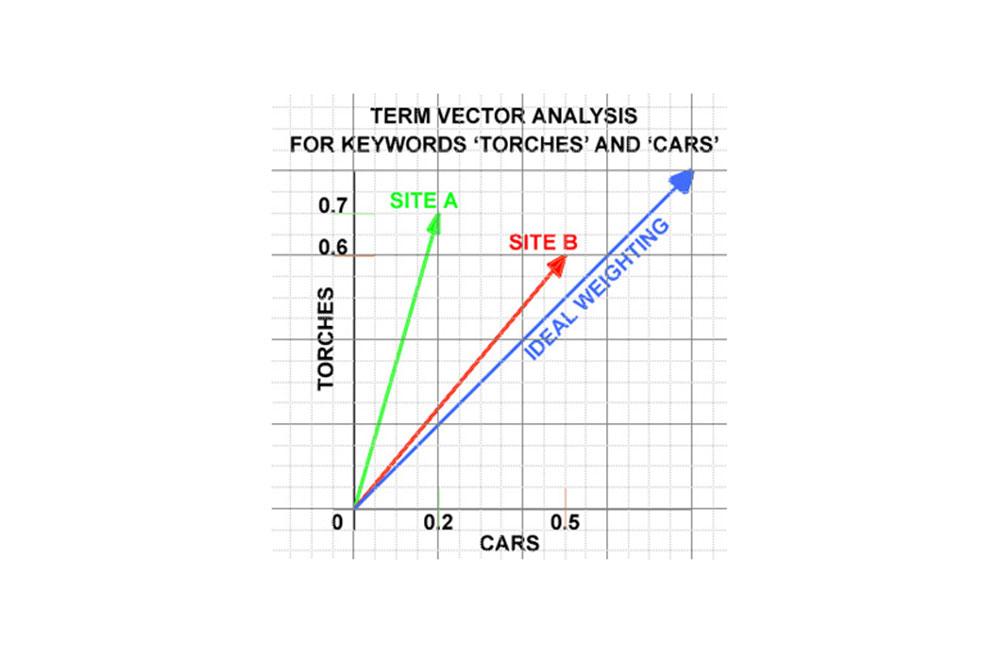

Having established the relevancy of the subjects and objects in each web page, the next step is to look for other pages on the website that appear similar or have equally high relevancy scores for the same keywords.

Part of this process examines synonyms, measuring how often the same or semantically similar words are used. This builds an index of semantics, because synonyms help give the subject or object more meaning.

As a result, a page can rank higher even if it contains no exact match for the search keywords in question, because the latent semantics derived from that page may be more relevant to the search.
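The effect can be illustrated with a minimal sketch. It is not how a search engine implements Latent Semantic Indexing: real LSI derives word associations statistically from a large body of text, whereas this example assumes a small hand-written synonym table. The `CONCEPTS` table, the function names, and the sample pages are all hypothetical.

```python
import re

# Hypothetical synonym groups (an assumption for illustration only).
# A real index would derive these associations from the text itself.
CONCEPTS = {
    "poster": {"poster", "posters", "print", "chart", "wallchart"},
    "children": {"children", "child", "kids", "toddlers"},
    "alphabet": {"alphabet", "abc", "letters"},
}

# Reverse lookup: each word points at the concept it belongs to.
WORD_TO_CONCEPT = {
    word: concept for concept, words in CONCEPTS.items() for word in words
}


def tokenize(text):
    """Lower-case the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def exact_score(query, page):
    """Fraction of query words that appear literally on the page."""
    query_words = set(tokenize(query))
    page_words = set(tokenize(page))
    if not query_words:
        return 0.0
    return len(query_words & page_words) / len(query_words)


def concept_score(query, page):
    """Fraction of query concepts that the page covers via any synonym."""
    query_concepts = {WORD_TO_CONCEPT[w] for w in tokenize(query) if w in WORD_TO_CONCEPT}
    page_concepts = {WORD_TO_CONCEPT[w] for w in tokenize(page) if w in WORD_TO_CONCEPT}
    if not query_concepts:
        return 0.0
    return len(query_concepts & page_concepts) / len(query_concepts)


query = "kids alphabet poster"
page_a = "A colourful ABC wallchart that helps children learn their letters."
page_b = "Poster frames and poster hangers for every room."

for name, page in (("page_a", page_a), ("page_b", page_b)):
    print(name, exact_score(query, page), concept_score(query, page))
```

Here `page_a` shares no literal word with the query, so its exact-match score is zero, yet it covers all three query concepts through synonyms. `page_b` repeats the word "poster" but covers only one concept, so it scores lower once meaning is taken into account.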

How Latent Semantic Indexing affects you: The better you describe the subjects and objects within each sentence, the more accurately the search engine can pin down what your site is about and hence determine the most relevant page. This can substantially increase the number of appropriate hits you receive from the visitors you aim to attract.

However, while varying your vocabulary is good, beware of using words in an unusual context, even when doing so is grammatically correct, as it may skew your results. It is often useful to relate the object you are writing about to the senses or to visual images – especially in direct copywriting – but pick your words carefully.

For example, a page describing a children’s ABC poster recently stated that ordering through the company’s online shop was “a piece of cake”. Shortly afterwards the site statistics started showing visitors who had been searching for information about cake decorating. An alternative way of putting it, without losing the informal tone or diluting the relevancy of the page, might have been “ordering is as easy as ABC”.
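One way to catch this kind of phrase before publishing is to scan the copy against a watch list of idioms. The sketch below is only an illustration: the `IDIOMS` list, the topics attached to each idiom, and the `flag_idioms` function are hypothetical, and a real list would have to be built by hand for your own subject area.

```python
import re

# Hypothetical watch list (an assumption for illustration only):
# each idiom maps to the unrelated topic it might attract searches for.
IDIOMS = {
    "piece of cake": "baking",
    "cup of tea": "tea",
    "walk in the park": "parks",
}


def flag_idioms(copy):
    """Return (idiom, off-topic subject) pairs found in the page copy."""
    # Collapse whitespace so idioms split across line breaks are still found.
    normalized = re.sub(r"\s+", " ", copy.lower())
    return [(idiom, topic) for idiom, topic in IDIOMS.items() if idiom in normalized]


print(flag_idioms("Ordering through our online shop is a piece of cake."))
print(flag_idioms("Ordering is as easy as ABC."))
```

The first call reports the "piece of cake" phrase along with the topic it may pull in, while the reworded sentence passes with nothing flagged.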